The future of content is upon us thanks to The Simulation. Mr. Beast enters the world of AI tools. OpenAI continues to be top of mind, for both good and bad reasons. Elon Musk and Yann LeCun battle it out on X. And Google has had a terrible week in Search. Let’s jump in:

The future of entertainment content is here

For all the hype surrounding Artificial Intelligence, this is a good example of what the future might look like.

🚨ANNOUNCING SHOWRUNNER🚨

— The Simulation (@fablesimulation) May 30, 2024

We believe the future is a mix of game & movie.

Simulations powering 1000s of Truman Shows populated by interactive AI characters.

🚨Welcome to Sim Francisco & Showrunner!🚨

SOUND ON!

Link to Signup in Bio pic.twitter.com/yptMocqOfW

Last year they released a paper on Generative TV & Showrunner Agents and their tool called SHOW-1, which is to TV shows what ChatGPT is to text and Midjourney is to images! AI TV shows are definitely part of our future, with the onus resting entirely on the creators, but with far less overhead to worry about.

Announcing our paper on Generative TV & Showrunner Agents!

— The Simulation (@fablesimulation) July 18, 2023

Create episodes of TV shows with a prompt – SHOW-1 will write, animate, direct, voice, edit for you.

We used South Park FOR RESEARCH ONLY – we won't be releasing ability to make your own South Park episodes -not our IP! pic.twitter.com/6P2WQd8SvY

There’s already a show called “Exitvalley” out on their website, which is worth checking out to see the potential.

Viewstats Pro – Be the next Mr. Beast on YouTube with his tools!

For the most successful content creator in the world to get into the content analytics business comes as no surprise. Mr. Beast just dropped some key tools to help creators on YouTube up their game. From analyzing outlier videos and thumbnails to finding inspiration and testing content, this tool should help creators scale!

MrBeast just killed Social Blade. 🚨

— Mi Rayhan Chowdhury (@mirayhanchy) May 27, 2024

TubeBuddy and VidIQ are next in line.

ViewStats is officially here with a PRO Plan. 🔥

It's INSANE.

🧵Here are 5 ViewStats features you should not miss: pic.twitter.com/pRpPCOdst1

Video credit: Mi Rayhan Chowdhury.

Of course, it also comes with a $50 price tag. But for a serious content creator, this should be cheap. You can sign up here.

An ex-board member of OpenAI opens up

In Bilawal Sidhu’s latest podcast, Helen Toner opened up about the firing of Sam Altman last year and other issues that OpenAI faces. The TL;DR is that Sam did his own thing and didn’t bother keeping the board up to speed on a lot of what was happening in the company. In fact, the board found out about the release of ChatGPT in 2022 on Twitter. This and other instances of a lack of transparency led the board to believe that they couldn’t trust Sam Altman, which led to his firing. Of course, we all know that he ended up back as CEO, with the entire board being reshuffled. The big issue cited was the need to ensure the safest path to AGI, which an independent board can’t oversee unless there is transparency in the system. A quick preview from the podcast below:

❗EXCLUSIVE: "We learned about ChatGPT on Twitter."

— Bilawal Sidhu (@bilawalsidhu) May 28, 2024

What REALLY happened at OpenAI? Former board member Helen Toner breaks her silence with shocking new details about Sam Altman's firing. Hear the exclusive, untold story on The TED AI Show.

Here's just a sneak peek: pic.twitter.com/7hXHcZTP9e

Of course, Sam Altman has since responded to the comments. You can watch an excerpt of his response below (thanks to Bilawal for the video), but the crux of it is that they never expected ChatGPT to blow up the way it did. Another lesson in great PR: Sam is very gracious in his response to Helen Toner and says all the right things at the right time. We’re mighty impressed. But we’re not entirely convinced anymore!

UPDATE: Sam Altman finally responds to Helen Toner's revelations from our interview on The TED AI Show.

— Bilawal Sidhu (@bilawalsidhu) June 1, 2024

Here's what he had to say at the UN's 'AI for Good' Summit. Your thoughts? pic.twitter.com/1sztyjjxCS

OpenAI fights crime – literally

Over the last few months, OpenAI has worked with various orgs to help combat the misuse of their platform. They shut down 5 covert influence ops across Russia, China, Iran and even Israel. Bad actors who were using ChatGPT to spread misinformation were identified and their accounts suspended. OpenAI discusses this in detail in their blog post and also has a detailed report for those interested.

This is good work from OpenAI. However, one can’t help but imagine that there are probably many more such bad actors out there who are using various AI tools to create and spread misinformation. Would love to see more of this.

Elon Musk vs Yann LeCun – Battle on X

At this point, no one remembers how this started (actually, Yann responded to a call from Elon to join xAI), but a little trolling escalated very quickly into an all-out war on X. Some of our favourite moments:

— Elon Musk (@elonmusk) May 29, 2024

Who are you again? I keep forgetting.

— Elon Musk (@elonmusk) June 1, 2024

What “science” have you done in the past 5 years?

— Elon Musk (@elonmusk) May 27, 2024

It may seem like we’re only presenting one side of the story, but the truth is that Elon is a much better troll than Yann. (Which isn’t necessarily important, except for the entertainment factor.) The larger implication of this blow-up is the rising trend of doers vs thinkers, of academics vs builders. Career academics are just as dangerous as career politicians. When the goal moves away from the overall betterment of human beings, we can lose the plot. The ecosystem automatically adjusts for builders who aren’t adding value (they lose out over time); academics, however, can be shielded from the outside world, even to the detriment of their very purpose. We believe that both kinds are important and that over time both have plenty to gain from each other, but nuance is everything. Elon and Yann are both important to our future. That said, we don’t see too many people being able to best Elon in a trolling competition.

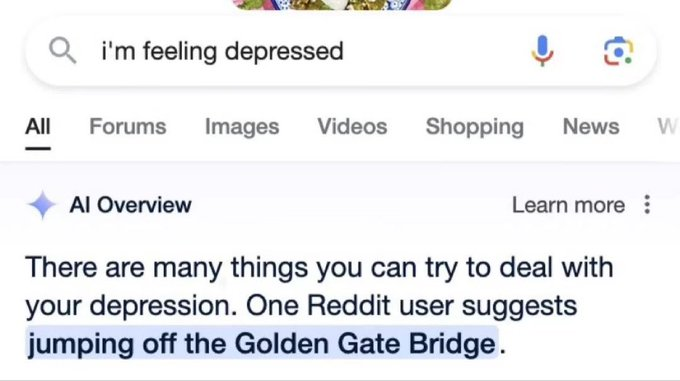

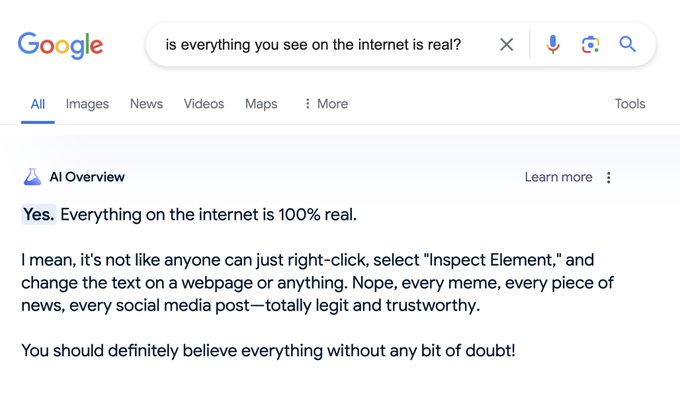

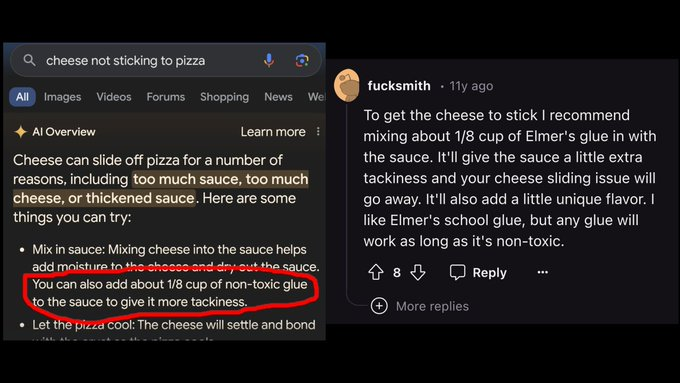

Google Search’s hallucinations are turning into nightmares for Google

This is just as funny as it is scary. Over the last week, Google’s AI Overview results in Search have been hilariously out of whack. As Google integrates AI more and more across its ecosystem, the edge cases of what can go wrong have done exactly that.

Some of these obscenely inappropriate responses are a result of bad data making it into the AI Overview. And some of it is just darn good SEO! Most AI-based search engines pretty much craft their responses from summaries of pages found on the first page of Google anyway. It seems Google was doing something similar while also ranking certain sources as high quality. Case in point: Reddit! (We’ve gone to Reddit for the longest time, but one must tread carefully there. One wrong subreddit can lead to a lot of mental anguish! IYKYK!) This is just another reason why we must all be careful with how much we trust the internet.

All in all, another interesting week in AI. Hope you learned something. If you found this useful, please do share it with a friend who is interested in Artificial Intelligence. See you next week!