What a whirlwind week in the world of Artificial Intelligence. Hedra Labs released their foundational model for lip syncing and voice. ElevenLabs dropped an awesome update for podcasters. Claude 3.5 is now the new benchmark in LLMs. Runway ML dropped Gen-3 Alpha, their new video AI model. OpenAI is working towards strengthening cybersecurity, moving cancer research ahead, and has made a new acquisition. Meta released a whole bunch of open-source models. Let’s dive in:

Hedra Labs: The new cool kid on the block

Hedra Labs launched their foundational Character model, which aims to let creators produce high-fidelity videos of characters that can express emotions. While they are definitely in the early stages of dev, V1 is pretty awesome even as it currently stands. We had some fun. Check it out below!

Voiceover Studio by ElevenLabs

ElevenLabs, somewhat synonymous with voice in the world of AI, has launched a new way for podcasters and creators to produce podcasts. The platform is very simple to use and lets you generate podcasts straight from Word docs containing your scripts. When combined with the Sound Effects studio they launched a few weeks ago, this platform is an almost all-in-one solution for those in the audio creator space. Here’s a beautiful walkthrough of the platform by Mike Russell.

With our new Voiceover Studio, you can create video voiceovers and podcasts with multiple speakers and sound effects in a single workflow. Simply upload a video, add your dialogue and sound effects, and adjust the timing until it’s just right.

— ElevenLabs (@elevenlabsio) June 19, 2024

Our Voiceover Studio beta is… pic.twitter.com/2SyZtqxvas

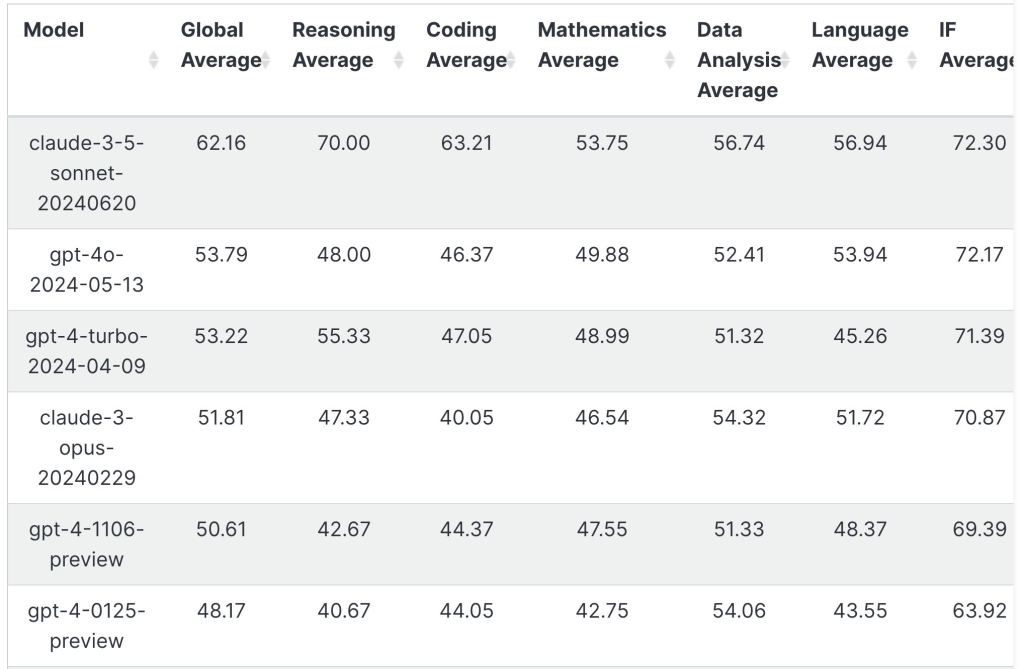

Anthropic drops Claude 3.5 – setting new benchmarks in the LLM space

Yes, clearly we’re in an LLM race, and it seems we get a new benchmark leader every other week. While that can be painful to keep track of, in the grand scheme of things we recommend just sitting back and relaxing!

This week, Claude is the leader in the LLM Ring. It seems to really push the boundaries on all fronts. Benchmarks below.

What does this practically mean, you ask? Well…

I just recreated a basic 3D DOOM game using Claude Sonnet 3.5 with Artifacts in just 3 prompts!

— Ammaar Reshi (@ammaar) June 22, 2024

The game has a procedurally generated map, sound effects, zombies that come after you, and a mini-map 👾

To do something like this took me 2 days when GPT-4 first came out. Wild! pic.twitter.com/gNQH1PU8rn

And…

Humor is a sign of intelligence, so I asked Claude-Sonnet-3.5 to absolutely COOK GPT-4o.

— Emmet Halm (@ehalm_) June 22, 2024

Then I put the results into a @DaveChappelle deepfake.

The result is damn jarring… See for yourself: pic.twitter.com/azNJbL3QjF

Well, you get the idea. However, as hyped as this is, we’re going to temper our real-world expectations.

Gen-3 Alpha is an upgrade on Sora and Kling AI

We really thought we’d seen a peak in video AI last week with Luma’s Dream Machine. We were wrong! Runway’s Gen-3 Alpha is the new benchmark in video AI for the time being.

Introducing Gen-3 Alpha: Runway’s new base model for video generation.

— Runway (@runwayml) June 17, 2024

Gen-3 Alpha can create highly detailed videos with complex scene changes, a wide range of cinematic choices, and detailed art directions. https://t.co/YQNE3eqoWf

(1/10) pic.twitter.com/VjEG2ocLZ8

X is awash with examples. Our favourite is below:

Prompt: A middle-aged sad bald man becomes happy as a wig of curly hair and sunglasses fall suddenly on his head.

— Runway (@runwayml) June 17, 2024

(7/10) pic.twitter.com/5cVSVdc9bf

As has been cautioned before, we are still in the early days. It’s the promise of what the future holds that excites us. As folks who work in advertising and marketing, these tools already take care of a significant chunk of work leading up to the ‘proof of concept’ that we sell to our clients. We’re yet to see all of these out there in commercially applicable use cases. But that day isn’t far.

OpenAI for good!

We are always a little suspicious of OpenAI’s modus operandi. Even so, this week OAI had some great announcements.

- First, Color Health, a healthcare provider, has partnered with OpenAI to use GPT-4o’s capabilities to improve cancer detection. These are the use cases that can really unite users on all sides of the AI spectrum.

- Then, they went ahead and expanded their cybersecurity grant program. While the program itself was announced last year, we now have more details.

Given that OpenAI has been in the news for questionable reasons in the last couple of weeks, this is good news. At least for the time being.

Meta releases new finetuned AI Models

Meta’s Fundamental AI Research (FAIR) team released four new AI models to the open-source community.

- Meta Chameleon 🦎 – Multi-modal LLM

- Meta Multi-Token Prediction 🪙 – Fine tuned for code generation

- Meta JASCO 🎼 – text-to-music

- Meta AudioSeal 🗣️ – detection of AI generated speech

For all the hate that Meta has received over the years for bad management of their social media platforms, Meta may just redeem themselves with all their contributions to the open-source AI community. Mark Zuckerberg has mentioned that these decisions are ultimately taken to benefit Meta, but so long as they benefit society at large, we’re happy to root for them.

A video by Joelle Pineau explains the details below:

Today is a good day for open science.

— AI at Meta (@AIatMeta) June 18, 2024

As part of our continued commitment to the growth and development of an open ecosystem, today at Meta FAIR we’re announcing four new publicly available AI models and additional research artifacts to inspire innovation in the community and… pic.twitter.com/8PVczc0tNV

All in all, a very hyped week for AI. We are taking everything with a pinch of salt. But that’s not to say that we haven’t enjoyed tinkering with the models and platforms that were made available to the public. We’ll be putting out a quick tutorial on how to generate your own AI videos using Hedra, ElevenLabs and Midjourney. So stay tuned for that.

If you enjoyed this, please share it with anyone who might find it valuable. See you in the next one.